Let's Discuss

Enquire NowIn Statistics there are two schools of thought with respect to statistical inference: Bayesian and Frequentist approaches. Before we dive into the comparison and how these schools of thought define different approaches to different types of problems in Data Science, let’s have a brief overview of what these terms mean.

If you are fluent in Statistics, this explanation would be helpful, from Larry Wasserman’s notes on Statistical Machine Learning (with disclaimer: “at the risk of oversimplifying”) :

Frequentist versus Bayesian Methods

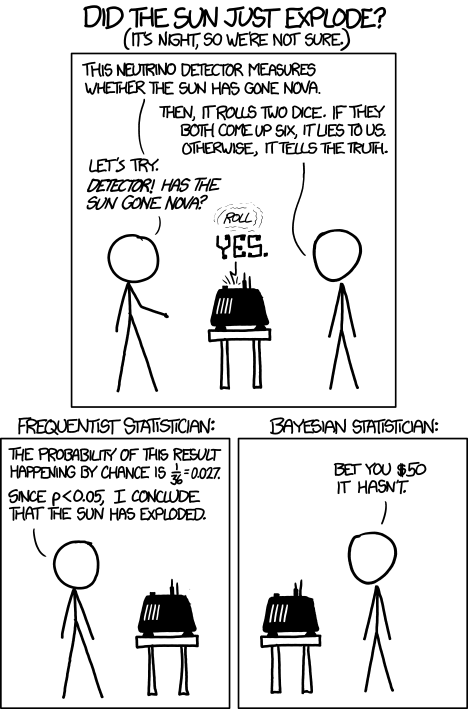

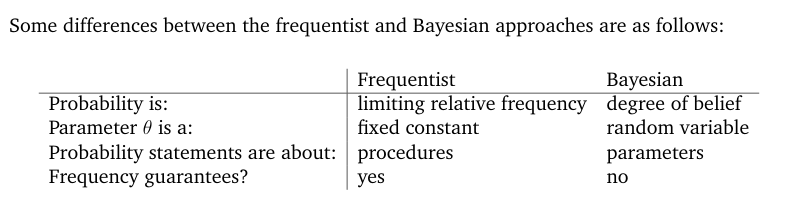

- In frequentist inference, probabilities are interpreted as long run frequencies. The goal is to create procedures with long run frequency guarantees.

- In Bayesian inference, probabilities are interpreted as subjective degrees of belief. The goal is to state and analyze your beliefs.

If you are still confused, here’s a more approachable definition:

Frequentist Inference:

In Frequentist approaches the observed data is sampled from some distribution and the maximum likelihood of the data with respect to the specific distribution.

P(Data|θ) is the likelihood of the event occurring in the specific distribution and θ is a constant. Logistic and linear regressions are examples of statistical models which use frequentist inference.

Bayesian Inference:

This is basically how humans are wired to think about probability. In Bayesian Statistics probability is measured by the subjective degree of belief but this also lends to the critique that different beliefs (priors) could lead to different conclusions (posteriors). If P(Data|θ) is the likelihood of the event occurring, then in Bayesian approaches θ is treated as a variable, and prior distribution of the hypotheses P(θ) is also assumed.

Still confused?

Don’t worry, here’s a more simplified version that you could explain to a 10 year old:

Imagine you misplaced your phone in your house, so you call your phone from another phone, how would you go about finding it, which area of your home would you start searching?

The Frequentist Reasoning would go like this: You hear the phone beep, you have a mental model of where the sound is coming from and you infer the location from the same.

Now the Bayesian reasoning: You hear the phone beep, in addition to the mental model of where you hear the beep, you also have knowledge of the areas in your home where you have misplaced the phone in the past and you incorporate this knowledge to infer the location from where to start searching.

Image Source: https://www.explainxkcd.com/wiki/index.php/1132:_Frequentists_vs._Bayesians

Comparison

- Uncertainty: In estimating parameter uncertainty the two approaches vary widely: Frequentist approaches use null hypothesis and confidence intervals to ascertain uncertainty, probabilities are not assigned to parameter values unlike Bayesian methods where there exists posterior distribution over parameter values. Frequentists use Maximum Likelihood Estimation to get point estimates of unknown parameters, probabilities to unknown parameters don’t get assigned, while Bayesians get uncertainty of parameter values estimates by integrating full posterior distribution.

- Computational Cost: Frequentist methods are less computationally intensive than Bayesian methods due to integration over many parameters.

Image Source: https://www.stat.cmu.edu/~larry/=sml/Bayes.pdf

So what should you use?

It’s entirely up to the problem definitions/domains, the type of predictions we want, and the availability or lack thereof of priors that can be incorporated. Ultimately there’s not a consensus on which method is better, frequentism has dominated the field of statistics for over a century but with the advent of faster computers the more computational cost of Bayesian methods have become less of a disadvantage, there are algorithms which fare better when frequentism is used over Bayesian methods and vice versa.

In conclusion you could find strong arguments for and against each side but ultimately, like this nytimes article said: “Bayesian statistics, in short, can’t save us from bad science.”

Have a project in mind that includes complex tech stacks? Dexlock aims to connect with futuristic thinkers for a technologically advanced world. Join hands with us to make your aspirations real. Contact here.

Disclaimer: The opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Dexlock.