Let's Discuss

Enquire NowNumba is an open-source, NumPy-aware optimizing compiler for Python sponsored by Anaconda, Inc. It operates on the array and numerical functions that give you the power to speed up your applications with high performance functions written directly in Python. Numba translates Python functions to optimized machine code at runtime using the industry-standard LLVM compiler library, boosting numerical algorithms in Python to approach the speeds of C & FORTRAN.

Features of Numba

- Accelerates python numerical functions by providing different invocation modes that trigger differing compilation options and behaviors.

- No requirement of running a separate compilation step or replacing the python interpreter. Just apply one of the Numba decorators to your Python function, and Numba does the rest.

- Built for scientific computing, used with NumPy arrays and functions. Numba generates specialized code for different array data types and layouts to optimize performance.

- Works great with Jupyter notebooks for interactive computing, and with distributed execution frameworks, like Dask and Spark.

- Helps in parallelizing algorithms by offering a range of options for parallelizing your code for CPUs and GPUs, often with only minor code changes, providing simplified threading, GPU acceleration, and SIMD vectorization.

- On the fly code generation preference

- It generates native code for the CPU (default) and GPU hardware

- Integration with the Python scientific software stack

Numba – Working

Numba considers the types of input arguments that get passed into a decorated function and combines this info with the Python bytecode it reads for the function. After optimizing and analyzing the code, it uses the LLVM compiler library for generating a machine code version of the function, tailored according to the CPU capabilities. Then every time the decorated function is called, this compiled version is used.

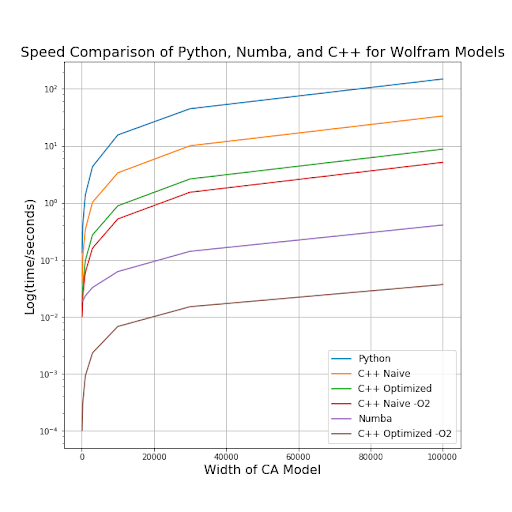

How fast is Numba?

If we make the assumption that Numba can operate in python-mode, or at least gets to compile some loops, it targets compilation according to the specific CPU on which it is being run. So depending on the application, the speed-up varies but can be one to two orders of magnitude. Numba also provides a performance guide that shows some general options for getting an extra performance.

Examples

The code below demonstrates the potential performance boost when using the nogil feature. For example, on a 4-core machine, the following results were printed:

NumPy (1 thread) 145 ms

numba (1 thread) 128 ms

numba (4 threads) 35 ms

import math import threading from timeit import repeat import numpy as np from numba import jit nthreads = 4 size = 10**6 def func_np(a, b): """ Control function using Numpy. """ return np.exp(2.1 * a + 3.2 * b) @jit('void(double[:], double[:], double[:])', nopython=True, nogil=True) def inner_func_nb(result, a, b): """ Function under test. """ for i in range(len(result)): result[i] = math.exp(2.1 * a[i] + 3.2 * b[i]) def timefunc(correct, s, func, *args, **kwargs): """ Benchmark *func* and print out its runtime. """ print(s.ljust(20), end=" ") # Make sure the function is compiled before the benchmark is # started res = func(*args, **kwargs) if correct is not None: assert np.allclose(res, correct), (res, correct) # time it print('{:>5.0f} ms'.format(min(repeat( lambda: func(*args, **kwargs), number=5, repeat=2)) * 1000)) return res def make_singlethread(inner_func): """ Run the given function inside a single thread. """ def func(*args): length = len(args[0]) result = np.empty(length, dtype=np.float64) inner_func(result, *args) return result return func def make_multithread(inner_func, numthreads): """ Run the given function inside *numthreads* threads, splitting its arguments into equal-sized chunks. """ def func_mt(*args): length = len(args[0]) result = np.empty(length, dtype=np.float64) args = (result,) + args chunklen = (length + numthreads - 1) // numthreads # Create argument tuples for each input chunk chunks = [[arg[i * chunklen:(i + 1) * chunklen] for arg in args] for i in range(numthreads)] # Spawn one thread per chunk threads = [threading.Thread(target=inner_func, args=chunk) for chunk in chunks] for thread in threads: thread.start() for thread in threads: thread.join() return result return func_mt func_nb = make_singlethread(inner_func_nb) func_nb_mt = make_multithread(inner_func_nb, nthreads) a = np.random.rand(size) b = np.random.rand(size) correct = timefunc(None, "numpy (1 thread)", func_np, a, b) timefunc(correct, "numba (1 thread)", func_nb, a, b)

Performance enhancement methods:

-

No Python mode vs Object mode

One of the general ways for performance enhancement is decorating the functions with @jit which is the most flexible decorator offered by Numba. It has two modes of compilation, first, it tries to compile the decorated function in no python-mode. In case this isn’t successful, it tries to compile the function in object mode. Compiling the functions under no python-mode is really the key to good performance although loop lifting in object mode can enable some performance boost. Decorators @njit and @jit(nopython = True) can be used to ensure only no python-mode is used and to raise an exception if compilation fails.

-

Loops

The performance can be enhanced when using loops too. Numba is perfectly happy working with loops while NumPy has developed a strong idiom around the use of vector operation. In Numba, users familiar with C (or Fortran) can write Python in this style and it will work fine.

-

Fast math

Strict IEEE 754 compliance is less important and significant in certain classes of applications. So, we can relax some numerical rigor with the view of gaining additional performance. We can achieve this behavior in Numba by using keyword argument – fast math.

-

Parallel=True

Numba can compile a version that will run in parallel on multiple native threads (no GIL!) if the code consists of operations that are parallelizable (and supported) . Simply adding the parallel keyword argument ensures parallelization is performed automatically.

-

Intel SVML

A large number of optimized transcendental functions which can be used as compiler intrinsics are available in the short vector math library (SVML), provided by Intel.SVML provides both high and low accuracy versions of each intrinsic and the version that is used is determined through the use of the fast math keyword. Numba automatically configures the LLVM back end to use the SVML intrinsic functions wherever possible if the icc_rt package is present in the environment (or the SVML libraries are simply locatable).

-

Linear Algebra

Numba supports most of numpy.linalg in no Python mode. The internal implementation has a dependency on a LAPACK & BLAS library to do the numerical work and it obtains the bindings for the necessary functions from SciPy. So, to achieve good performance in numpy.linalg functions with Numba it is essential to use a SciPy built against a well-optimized LAPACK/BLAS library. For example, Numba makes use of this performance in the case of the Anaconda distribution SciPy, built against Intel’s MKL which is highly optimized.

How to measure Numba’s Performance?

First, Numba compiles the function for the argument types given before it executes the machine code version of the method. This takes some time.But once the compilation has taken place, Numba caches the machine code version of the function for the particular types of arguments presented. If the function is called again with the same types, it reuses the cached version instead of compiling it again.One of the common mistakes when measuring Numba’s performance is to time code once with a simple timer that includes the time taken to compile your function in the execution time and not considering the above behavior.

Other useful decorators in Numba

- @vectorize – produces NumPy ufunc s (with all the ufunc methods supported).

- @guvectorize – produces NumPy generalized ufunc s.

- @stencil – declare a function as a kernel for a stencil-like operation.

- @jitclass – for jit-aware classes.

- @cfunc – declare a function for use as a native call back (to be called from C/C++ etc).

- @njit – this is an alias for @jit(nopython=True) as it is so commonly used!

- @overload – register your own implementation of a function for use in nopython mode, e.g. @overload(scipy.special.j0).

Summary

So to summarize, Numba is a great tool to enhance performance. Numerical functions in Python significantly which doesn’t require a separate compilation step as such and has several features well suited for computational efficiency and scientific research. It also helps in parallelizing algorithms using multi threads. The major setback is that it is limited to numerical and computational domains.

Have a project in mind that includes complex tech stacks? We can be just what you’re looking for! Connect with us here.

Disclaimer: The opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Dexlock.