Let's Discuss

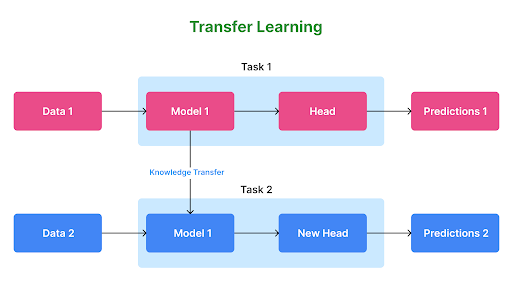

Transfer learning (TL) is a subfield of machine learning that focuses on using knowledge learned from one task to improve performance on another, related task. This is particularly useful when labeled data for the target task is limited, because the model can draw on what it learned from the related task to make better predictions. In recent years, transfer learning has been applied across many areas of machine learning, including computer vision, natural language processing, and reinforcement learning. It has been used to improve image classification models, language translation models, and game-playing agents, among others.

Benefits and Applications of TL

Training a neural network from scratch typically requires a lot of data, and access to that data isn't always possible. This is where transfer learning comes in handy: because the model has already been pre-trained on a related task, a good model can be obtained with relatively little task-specific training data. This is especially useful in NLP, where building large labeled datasets requires considerable expert annotation effort. Training time also drops, since training a deep neural network from scratch on a complex task can take days or even weeks. In short, transfer learning typically provides a better starting model, faster convergence during training, and often higher accuracy after training.
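To make the pretrain-then-fine-tune idea concrete, here is a minimal sketch in plain Python. It assumes a toy setup rather than a real neural network: a bias-free logistic-regression model is first trained on a hypothetical "source" task with plenty of synthetic data, then fine-tuned for a few steps on a small "target" task whose decision boundary is slightly different. The tasks, the helper names (`make_task`, `train`, `loss`) and all hyperparameters are illustrative assumptions.

```python
import math
import random


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def make_task(true_w, n, rng):
    """Hypothetical synthetic task: 2-D points, label 1 when true_w . x > 0."""
    data = []
    for _ in range(n):
        x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
        y = 1.0 if true_w[0] * x[0] + true_w[1] * x[1] > 0 else 0.0
        data.append((x, y))
    return data


def loss(w, data):
    """Mean binary cross-entropy of a bias-free logistic model."""
    total = 0.0
    for x, y in data:
        p = sigmoid(w[0] * x[0] + w[1] * x[1])
        p = min(max(p, 1e-12), 1 - 1e-12)  # avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def train(w, data, steps, lr):
    """Full-batch gradient descent starting from `w`; returns new weights."""
    w = list(w)
    for _ in range(steps):
        grad = [0.0, 0.0]
        for x, y in data:
            err = sigmoid(w[0] * x[0] + w[1] * x[1]) - y
            grad[0] += err * x[0]
            grad[1] += err * x[1]
        w = [w[i] - lr * grad[i] / len(data) for i in range(2)]
    return w


rng = random.Random(0)
source = make_task([1.0, 1.0], 1000, rng)      # plenty of source-task data
target_train = make_task([1.0, 0.8], 20, rng)  # related task, few labels
target_test = make_task([1.0, 0.8], 500, rng)

# 1) Pre-train on the source task.
pretrained = train([0.0, 0.0], source, steps=300, lr=1.0)

# 2) Same small target budget: start from pretrained weights vs. from zero.
finetuned = train(pretrained, target_train, steps=5, lr=0.5)
scratch = train([0.0, 0.0], target_train, steps=5, lr=0.5)

print("fine-tuned from pretrained:", round(loss(finetuned, target_test), 3))
print("trained from scratch:      ", round(loss(scratch, target_test), 3))
```

With the same small budget of target data and update steps, the model that starts from the pretrained weights should reach a noticeably lower target loss than the one trained from scratch, which is the "better starting model, faster convergence" effect in miniature. Real systems apply the same idea to deep networks with many more parameters.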

Some practical applications of TL include:

- Computer Vision: Transfer learning is widely used in computer vision tasks, such as image classification, object detection, and semantic segmentation. Pretrained models can be fine-tuned on new datasets to achieve improved results in these tasks.

- Natural Language Processing: Transfer learning has also been applied to NLP tasks, such as text classification, language translation, and named entity recognition.

- Audio and Speech Processing: Transfer learning can improve the performance of speech recognition, speaker recognition, and audio classification systems. Models pretrained on large speech datasets have been fine-tuned to achieve high accuracy on new datasets.

- Recommender Systems: Transfer learning has been applied to recommender systems to learn users’ preferences across different domains or platforms.

- Medical Image Analysis: Medical image analysis tasks like cancer diagnosis leverage knowledge from related tasks to improve the performance of the model.

- Robotics: Transfer learning is expected to play a key role in the development of autonomous robots. It allows these systems to learn from a small amount of data and adapt to new tasks quickly.

- Healthcare: Transfer learning is expected to be widely adopted in medical imaging and analysis. It can help to improve diagnosis and treatment decisions.
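A common way to fine-tune a pretrained model on a new dataset, in computer vision and elsewhere, is feature extraction: the pretrained layers are frozen and only a new task-specific output layer (the "head") is trained. The sketch below illustrates this in plain Python under loud assumptions: `BACKBONE` is a hypothetical stand-in for pretrained weights (in practice they would be loaded from a model trained on a large source dataset), and the target task, helper names and hyperparameters are made up for illustration.

```python
import math
import random

# Hypothetical "pretrained" backbone weights (3 hidden units, 2 inputs).
# In practice these would be loaded from a model trained on a source task.
BACKBONE = [[1.5, -0.5], [0.3, 1.2], [-1.0, 1.0]]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def features(x, backbone):
    """Frozen feature extractor: tanh(W x). Its weights are never updated."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in backbone]


def head_loss(head, data, backbone):
    """Mean binary cross-entropy of the new linear head on frozen features."""
    total = 0.0
    for x, y in data:
        h = features(x, backbone)
        p = sigmoid(sum(a * b for a, b in zip(head, h)))
        p = min(max(p, 1e-12), 1 - 1e-12)  # avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def train_head(backbone, data, steps=200, lr=0.5):
    """Feature extraction: train only a new head; the backbone stays frozen."""
    head = [0.0] * len(backbone)  # new task-specific head, initialised to zero
    # The backbone is frozen, so its features can be computed once up front.
    feats = [(features(x, backbone), y) for x, y in data]
    for _ in range(steps):
        grad = [0.0] * len(head)
        for h, y in feats:
            err = sigmoid(sum(a * b for a, b in zip(head, h))) - y
            for i, hi in enumerate(h):
                grad[i] += err * hi
        head = [w - lr * g / len(feats) for w, g in zip(head, grad)]
    return head


# Hypothetical small target dataset: label 1 when x0 + x1 > 0.
rng = random.Random(1)
target = []
for _ in range(30):
    x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
    target.append((x, 1.0 if x[0] + x[1] > 0 else 0.0))

frozen_copy = [row[:] for row in BACKBONE]
head = train_head(BACKBONE, target)
print("head weights:", [round(w, 2) for w in head])
print("target loss:", round(head_loss(head, target, BACKBONE), 3))
```

Because the backbone is frozen, its features can be computed once and reused, which keeps this variant cheap. Full fine-tuning instead also updates the backbone weights, typically with a small learning rate so the pretrained knowledge is not overwritten too quickly.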

Challenges of TL

Despite its many benefits, transfer learning still faces several challenges. Some of the main challenges include:

- Domain Shift: Transfer learning assumes that the source and target domains are related, but this is not always the case. When the two domains differ significantly, transfer learning may be ineffective or can even hurt performance, a phenomenon known as negative transfer.

- Overfitting: Pretrained models may be overfitted to the source domain, which can reduce their ability to generalize to a new target domain. Fine-tuning on a small target dataset can also overfit, so the process needs to be carefully controlled, for example with regularization techniques and early stopping.

- Task Compatibility: Transfer learning relies on the compatibility between the source and target tasks. Some tasks may be too different to transfer knowledge effectively.

- Data Bias: The performance of a transfer learning model can be affected by the bias present in the source dataset. If the source dataset is biased, the model may learn biased representations that do not generalize well to the target dataset.

- Transferability: Some models may not be easily transferable, especially those that use specialized architectures or have been trained on specific datasets.
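The overfitting controls mentioned in the list above can be combined in a few lines. The following sketch, in plain Python and under toy assumptions, fine-tunes a logistic-regression model from hypothetical pretrained weights with two safeguards: an L2 penalty that pulls the weights toward their pretrained values rather than toward zero (the idea behind the L2-SP regularizer, here simply applied to every weight), and early stopping that keeps the weights with the best validation loss. The pretrained weights, data, helper names and hyperparameters (`alpha`, `patience`) are illustrative assumptions.

```python
import math
import random


def predict(w, x):
    # Numerically stable sigmoid of the bias-free linear score w . x.
    z = sum(wi * xi for wi, xi in zip(w, x))
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def bce(w, data):
    """Mean binary cross-entropy, clipped to avoid log(0)."""
    total = 0.0
    for x, y in data:
        p = min(max(predict(w, x), 1e-12), 1 - 1e-12)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def finetune(w0, train, val, lr=0.5, alpha=0.1, max_steps=500, patience=10):
    """Fine-tune from pretrained weights `w0` with an L2-SP-style penalty
    alpha/2 * ||w - w0||^2 and early stopping on validation loss.

    Returns the weights with the lowest validation loss and that loss.
    """
    w = list(w0)
    best_w, best_val, bad_steps = list(w), bce(w, val), 0
    for _ in range(max_steps):
        # Gradient of the penalty pulls w back toward the pretrained w0 ...
        grad = [alpha * (wi - w0i) for wi, w0i in zip(w, w0)]
        # ... plus the gradient of the mean training loss.
        for x, y in train:
            err = predict(w, x) - y
            for i, xi in enumerate(x):
                grad[i] += err * xi / len(train)
        w = [wi - lr * gi for wi, gi in zip(w, grad)]

        val_loss = bce(w, val)
        if val_loss < best_val:
            best_w, best_val, bad_steps = list(w), val_loss, 0
        else:
            bad_steps += 1
            if bad_steps >= patience:
                break  # early stopping: no improvement for `patience` steps
    return best_w, best_val


def make_data(n, rng):
    # Hypothetical target task: label 1 when x0 + 0.8 * x1 > 0.
    data = []
    for _ in range(n):
        x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
        data.append((x, 1.0 if x[0] + 0.8 * x[1] > 0 else 0.0))
    return data


rng = random.Random(3)
train_set, val_set = make_data(20, rng), make_data(100, rng)
pretrained = [4.0, 4.0]  # hypothetical weights from source-task training

w_best, val_best = finetune(pretrained, train_set, val_set)
print("val loss before:", round(bce(pretrained, val_set), 3))
print("val loss after: ", round(val_best, 3))
```

Pulling toward `w0` instead of toward zero keeps what the pretrained model already knows unless the target data clearly argues otherwise, while early stopping caps how long the model can fit noise in a small target set.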

Addressing these challenges requires careful consideration of the source and target domains, selection of appropriate models, fine-tuning processes, and monitoring of model performance.
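For the domain-shift challenge in particular, one rough diagnostic is to compare the input (or feature) distributions of the source and target domains before relying on transfer. The sketch below estimates the squared Maximum Mean Discrepancy (MMD) with a Gaussian kernel, a standard two-sample statistic that is close to zero when two samples come from the same distribution and grows as they diverge. The synthetic data and the kernel width `gamma` are assumptions for illustration; a meaningful decision threshold would have to be chosen per application.

```python
import math
import random


def rbf(a, b, gamma):
    # Gaussian (RBF) kernel: k(a, b) = exp(-gamma * ||a - b||^2).
    return math.exp(-gamma * sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def mean_kernel(xs, ys, gamma):
    # Average kernel value over all pairs (x, y).
    return sum(rbf(x, y, gamma) for x in xs for y in ys) / (len(xs) * len(ys))


def mmd2(source, target, gamma=1.0):
    """Biased estimate of the squared Maximum Mean Discrepancy."""
    return (mean_kernel(source, source, gamma)
            + mean_kernel(target, target, gamma)
            - 2.0 * mean_kernel(source, target, gamma))


# Hypothetical 2-D inputs (or features) from each domain.
rng = random.Random(2)
source = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(200)]
similar = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(200)]
shifted = [[rng.gauss(2, 1), rng.gauss(0, 1)] for _ in range(200)]  # mean moved

print("source vs similar:", round(mmd2(source, similar), 4))
print("source vs shifted:", round(mmd2(source, shifted), 4))
```

This biased estimator is slightly positive even for two samples drawn from the same distribution, so it is best read comparatively (for example, across candidate source datasets) rather than as an absolute pass/fail test.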

Conclusion

Overall, transfer learning has revolutionized many modern applications of machine learning, improving performance, reducing the need for large labeled datasets, and accelerating model development. As research in transfer learning progresses, we can expect to see more advanced models and further expansion of its applications in various industries.


Disclaimer: The opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Dexlock.