Chatbots, also known as chatterbots, were originally created as attempts to simulate human conversation. The first chatbot, ELIZA, tried to simulate a Rogerian psychotherapist. Although ELIZA was quite successful at mimicking a psychotherapist, it did not really understand the user’s phrases: it relied on a small set of manually created patterns and stock replies matched against the user’s input. Nevertheless, it inspired the tech community to build systems that could pass Turing’s Imitation Game. The aim of the game is to see whether a machine can display human-like intelligent behaviour while holding a conversation with a person. The machine has to act in such a way that the human is tricked into thinking the replies came from another person.

Chatbot use has been increasing steadily, especially in education, e-commerce, and information retrieval. Chatbots could well replace services provided by humans in call centres. Traditionally, chatbots were handcrafted using scripting languages such as Artificial Intelligence Markup Language (AIML) and ChatScript. Recent innovations in conversational AI have taken chatbots a step further. Open-domain chatbots such as Google’s Meena, Facebook’s BlenderBot, and OpenAI’s GPT models are trained on very large datasets of conversations using neural dialogue technologies, which lets them reach near-human levels of accuracy and reliability.

What difference does it make?

Chatbots are transforming the way businesses interact with their customers. Businesses around the world can scale their customer service by bringing in these tools. A new case at a customer service centre takes from 8 to 45 minutes to handle, depending on the product or service type. Call centres typically spend up to $4,000 for every agent they hire, while experiencing employee turnover of 30–45%. Annual spending on this type of service in the United States alone is nearly $62 billion. AI chatbots can help with this problem.

Throughout 2020, many private and public entities built chatbots and other assistants to handle questions related to the novel coronavirus. These assistants fielded most responses through a simple question-and-response format. Most of these chatbots included symptom checker functionality through which the users were able to self-diagnose and socially distance themselves.

Typical Dialogue System architecture

When a user sends textual input to an AI assistant, that input is understood in the context of intent (what the user wants to achieve) and optionally some parameters that support that intent. We’ll call these parameters entities.

The general architecture includes four components:

- Interface: The way end-users interact with the assistant. This is the only part of the system that is visible to the user.

- Dialogue Engine: This module manages the dialogue states and acts as a coordinator between the NLU layer and the orchestration layer.

- Natural language understanding (NLU): This module is invoked by the dialogue engine and it extracts meaning from the user’s natural language input. Various machine learning and deep learning models are used to achieve this. The module will identify not only the intent but also the parameters associated with it.

- Orchestrator (Optional): Coordinates calls to APIs and databases to drive business processes and provide dynamic response data.

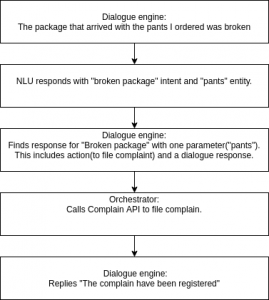

Consider an example of an online shopping assistant bot. The user gives input “The package that arrived with the pants I ordered was broken”. These are the functions that go in the background:
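As a minimal sketch of how that input might flow through the components above, the toy pipeline below uses keyword matching in place of a trained NLU model. The intent names, keyword lists, product list, and the `open_return` action are all hypothetical and chosen only for illustration; a real assistant would use machine learning models and real back-end calls.

```python
from dataclasses import dataclass, field

# Hypothetical keyword lists standing in for a trained NLU model.
INTENT_KEYWORDS = {
    "report_damaged_item": ["broken", "damaged", "torn"],
    "track_order": ["where is", "track", "status"],
}
KNOWN_PRODUCTS = ["pants", "shirt", "shoes"]


@dataclass
class NLUResult:
    intent: str
    entities: dict = field(default_factory=dict)


def understand(text: str) -> NLUResult:
    """NLU: map raw text to an intent plus supporting entities."""
    lowered = text.lower()
    intent = "unknown"
    for name, keywords in INTENT_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            intent = name
            break
    entities = {}
    for product in KNOWN_PRODUCTS:
        if product in lowered:
            entities["product"] = product
            break
    return NLUResult(intent, entities)


def orchestrate(result: NLUResult) -> dict:
    """Orchestrator: stand-in for the API or database calls."""
    if result.intent == "report_damaged_item":
        return {"action": "open_return", "product": result.entities.get("product")}
    return {"action": "none"}


def dialogue_engine(text: str) -> str:
    """Dialogue engine: coordinate NLU and orchestrator, then pick a reply."""
    result = understand(text)
    outcome = orchestrate(result)
    if outcome["action"] == "open_return":
        product = outcome["product"] or "item"
        return f"Sorry about that. I have started a return for your {product}."
    return "Could you tell me a bit more about what you need?"


reply = dialogue_engine("The package that arrived with the pants I ordered was broken")
print(reply)  # Sorry about that. I have started a return for your pants.
```

Here the intent is "report a damaged item" and the entity is the product ("pants"); the interface would simply display the returned reply.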

There have been two main phases in the effort to improve traditional dialogue systems. In the first phase, efforts were directed toward using machine learning techniques to optimize the components of the architecture, in particular the Natural Language Understanding (NLU), Dialogue Manager (DM), and Natural Language Generation (NLG) components.

In the second phase, a different approach was introduced, known as end-to-end neural dialogue systems. Here, deep neural networks are used to map input utterances directly to outputs without any processing by the traditional intermediary modules.

End-to-End Neural Architecture

Traditional architecture suffers from some limitations. It is difficult to determine which module is responsible for a failure or for low performance of the system. For example, if the response to a certain query was not very good, was the problem due to poor natural language understanding, the dialogue manager’s inability to choose the best action, a bug in the external APIs, or a failure in the natural language generation module?

Another issue is that optimizing one module can lead to a knock-on effect on the other modules. Joint optimization is more effective than individually optimizing the modules.

Similarly, adapting the system to a new domain is costly, since each module has to be retrained and tuned individually.

Many components of the traditional architecture are not required in an end-to-end architecture. Instead, there is a direct mapping between input and output. This mapping is referred to as sequence-to-sequence mapping, or Seq2Seq. The technique can be used in various domains such as automated email replies, open-domain chatbots, and language translation.

There are two major tasks in an end-to-end architecture:

- Process the input and represent it in a quantifiable manner: This process is known as encoding.

- Generate the output by processing these encodings: This process is known as decoding.

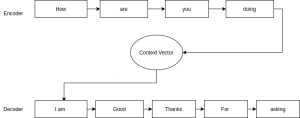

The input “How are you doing” is processed by a model that reads one token at a time until it reaches the end of the sentence. The model’s hidden state is called the context vector or thought vector. At each step it contains information about the sentence up to that particular token. The model is trained to map the input to an output such as “I am good. Thanks for asking”.
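The token-by-token reading described above can be sketched with a deliberately tiny recurrent encoder. The one-dimensional embeddings and the two weights below are made-up numbers rather than trained values, and a real encoder would use an LSTM, GRU, or transformer with high-dimensional vectors.

```python
import math

# Hypothetical one-dimensional token embeddings and weights (not trained values).
EMBEDDINGS = {"how": 0.5, "are": -0.3, "you": 0.8, "doing": 0.1}
W_HIDDEN = 0.6  # weight applied to the previous hidden state
W_INPUT = 1.0   # weight applied to the current token embedding


def encode(tokens):
    """Read one token at a time and return the hidden state after each step."""
    hidden = 0.0
    states = []
    for token in tokens:
        # The new hidden state mixes what was seen so far with the current token.
        hidden = math.tanh(W_HIDDEN * hidden + W_INPUT * EMBEDDINGS[token])
        states.append(hidden)
    return states


states = encode("how are you doing".split())
context_vector = states[-1]  # summary of the whole sentence
print(states)
```

Each entry in `states` depends on every token read up to that point, which is why the last one can serve as a summary of the whole input.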

The encoding network is usually a neural network such as an LSTM, a GRU, or, more recently, a transformer. While the encoder-decoder network has been applied successfully to machine translation, dialogue applications are more difficult: there can be a wide range of appropriate responses to an input in dialogue, as opposed to the phrase alignment between source and target sequences in machine translation. Dialogue responses can also be conditional on information from an external API or database.

For decoding in dialogue systems, representations are learned from corpora of dialogues and used to generate appropriate, contextualized responses: the decoder’s last hidden state is mapped to a probability distribution over the possible next tokens. Producing the output sequence one token at a time in this way, with each generated token fed back in as input for the next step, is called autoregressive generation.
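A minimal sketch of autoregressive generation is shown below. The table of next-token probabilities is hypothetical and, for simplicity, conditions only on the previous token; a trained decoder would compute the distribution from the context vector and all tokens generated so far. The sketch also always picks the most likely token (greedy decoding), which is only one of several possible strategies.

```python
# Hypothetical next-token probabilities; a trained decoder would compute these
# from the context vector and the tokens generated so far.
NEXT_TOKEN_PROBS = {
    "<start>": {"I": 0.9, "Thanks": 0.1},
    "I": {"am": 1.0},
    "am": {"good": 0.8, "fine": 0.2},
    "good": {"<end>": 0.7, "thanks": 0.3},
    "fine": {"<end>": 1.0},
    "Thanks": {"<end>": 1.0},
    "thanks": {"<end>": 1.0},
}


def greedy_decode(max_len: int = 10) -> list:
    """Generate tokens one at a time, feeding each choice back in as input."""
    tokens = []
    previous = "<start>"
    for _ in range(max_len):
        distribution = NEXT_TOKEN_PROBS[previous]
        # Greedy choice: take the most probable next token.
        next_token = max(distribution, key=distribution.get)
        if next_token == "<end>":
            break
        tokens.append(next_token)
        previous = next_token
    return tokens


print(" ".join(greedy_decode()))  # I am good
```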

One problem with encoder-decoder networks is that performance decreases as the input sequence becomes longer, so the context of a long conversation can be hard to keep track of. To address this problem, the attention mechanism was introduced, which lets the model focus on the parts of the input that are considered most relevant at each step. The transformer is the most widely used architecture based on this mechanism.
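One common form of attention, dot-product attention, can be sketched in a few lines. The two-dimensional encoder states and the query vector below are made-up numbers; in a real model they would be learned hidden states. Note that attention is a soft weighting: less relevant positions receive small weights rather than being discarded outright.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)  # subtracted for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, encoder_states):
    """Weight each encoder state by its dot-product similarity to the query."""
    scores = [sum(q * h for q, h in zip(query, state)) for state in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [
        sum(w * state[i] for w, state in zip(weights, encoder_states))
        for i in range(dim)
    ]
    return weights, context


# Hypothetical encoder hidden states, one per input token.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
query = [2.0, 0.0]  # hypothetical decoder state asking "what is relevant now?"

weights, context = attend(query, encoder_states)
print(weights)  # the first and third states get most of the weight
print(context)
```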

TASK-ORIENTED NEURAL DIALOGUE SYSTEMS

Initially, end-to-end dialogue learning was applied to open-domain conversational (chit-chat) systems with promising results. However, task-oriented systems present additional challenges. The system not only has to correctly identify the user’s intent and find a response, it also has to ask a series of questions based on the intent to elaborate or clarify the user’s request, and then issue one or more queries to a database or API to obtain the information to present to the user. Another challenge is the training dataset. While generic dialogue systems can draw on a wide range of open-domain corpora, fewer datasets are available for task-oriented systems, and those that exist are often proprietary and not publicly available. Even with corpora available, it is necessary to distinguish between generic features that can be learned and features that only apply in a particular domain.

There have been several attempts to address task-oriented dialogue systems. Li et al. investigated the interaction of an end-to-end neural dialogue system for movie-ticket booking with a structured database and found that the system outperformed the baseline rule-based system.

Wen et al. introduced an end-to-end task-oriented dialogue system consisting of networks for encoding intent, policy decisions, belief tracking, database operations, and generation. The database operator has an explicit representation of database attributes as slot-value pairs. The input is converted into two internal representations: a distributed representation generated by an intent network, and the belief state, which is a probability distribution over slot-value pairs. The slot-value pairs are used to generate the database query, and the policy network then combines the search result with the intent representation and the belief state into a single vector that determines the system’s next action. This vector drives response generation, which produces a template (skeleton) response that is subsequently completed by inserting the actual values retrieved from the database.
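The last steps of that pipeline (belief state, database query, template completion) can be illustrated with a hand-written sketch. Nothing here is taken from the Wen et al. implementation: the restaurant records, slot names, probabilities, and template are hypothetical, and the neural networks that would produce the belief state and choose the template are replaced by fixed values.

```python
# Hypothetical records standing in for the task database.
DATABASE = [
    {"name": "Golden Wok", "food": "chinese", "area": "north"},
    {"name": "Pasta Place", "food": "italian", "area": "centre"},
    {"name": "Roma", "food": "italian", "area": "north"},
]

# Belief state: for each slot, a probability distribution over candidate values.
# In a real system a belief tracker network would produce these numbers.
belief_state = {
    "food": {"italian": 0.85, "chinese": 0.10, "none": 0.05},
    "area": {"north": 0.70, "centre": 0.20, "none": 0.10},
}


def build_query(belief):
    """Pick the most probable value per slot and turn it into a query."""
    query = {}
    for slot, distribution in belief.items():
        value = max(distribution, key=distribution.get)
        if value != "none":  # "none" means the user has not constrained this slot
            query[slot] = value
    return query


def search(query):
    """Return every record matching all slot-value pairs in the query."""
    return [row for row in DATABASE if all(row[s] == v for s, v in query.items())]


def fill_template(template, row):
    """Complete a skeleton response with values retrieved from the database."""
    return template.format(**row)


query = build_query(belief_state)
results = search(query)
template = "{name} serves {food} food in the {area} part of town."
response = fill_template(template, results[0]) if results else "Sorry, nothing matches."
print(response)  # Roma serves italian food in the north part of town.
```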

The lack of training data for task-oriented systems was addressed by using a crowdsourced version of the Wizard of Oz method to generate dialogues for training. The end-to-end approach to task-oriented systems is still in its infancy, and many issues remain to be addressed. For example, the system does not handle recognition errors or the resulting uncertainty in the belief state.

Platforms to develop your chatbots

Several platforms allow you to build your chatbot without worrying about the underlying technologies.

MICROSOFT

Microsoft offers a full catalogue of software services that you can use to build your solution. For chatbots it offers two Azure services: Language Understanding Intelligent Service (LUIS) for natural language understanding and Bot Framework for dialogue and response. The BotBuilder SDKs are used to implement the business logic of your chatbot and support languages such as C#, JavaScript, Java, and Python.

AMAZON

Amazon also has multiple software services for building chatbots. The primary service for building AI assistants is Amazon Lex. It integrates easily with Amazon’s other cloud-based services as well as with external interfaces. Amazon Lex bots can be published to messaging platforms such as Facebook Messenger, Slack, Kik, and Twilio SMS. Lex also provides SDKs for iOS and Android app development.

GOOGLE

Google, through its Google Cloud Platform, provides a variety of software services in its catalogue and many prebuilt integration points. Google’s Dialogflow is the primary service for building conversational AI systems. Several other services are available for building your event classifiers.

RASA

Rasa gives you full control of the software you deploy. The other vendors usually only let you influence the classifier by changing its training data, but with Rasa you can customize the entire classification process. Chatbots can be deployed on multiple platforms such as Facebook Messenger, Microsoft Bot Framework, and Slack.

IBM

IBM offers a platform with easily integrated services. Watson Assistant is IBM’s AI assistant platform, and it is suitable for building chatbots as well as other types of assistants. Some studies show that Watson has higher benchmark scores than other commercial intent detection services.

Disclaimer: The opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Dexlock.