
GPT-3’s Closest Competitor Is Here: Meta AI Open-Sources OPT-175B, a 175-Billion-Parameter Language Model

Before we get into our topic, let's briefly review what GPT-3 is.

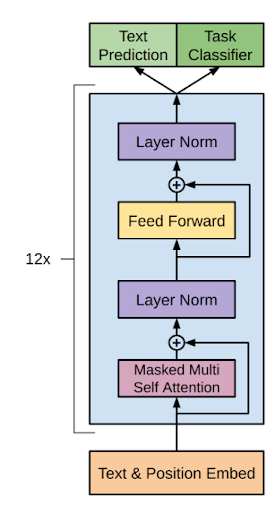

GPT-3 is a language model developed by OpenAI. It is pre-trained on large amounts of unlabeled text to predict the next token, and it is built from a stack of transformer decoder blocks.

The model outputs one token at a time: after each token is produced, it is appended to the input sequence, and that new sequence becomes the model's input at the next step.
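The generation loop described above can be sketched in a few lines of Python. This is a minimal illustration, not OpenAI's implementation: `generate`, `toy_next_token` and the `TOY_BIGRAMS` table are hypothetical stand-ins, and the "model" simply looks up the most recent token instead of running a transformer. What it does show is the autoregressive pattern: each predicted token is appended to the sequence, and the whole sequence is passed back in at the next step.

```python
from typing import Callable, List


def generate(next_token: Callable[[List[str]], str],
             prompt: List[str],
             max_new_tokens: int) -> List[str]:
    """Autoregressive decoding: feed each new token back in as input."""
    sequence = list(prompt)
    for _ in range(max_new_tokens):
        token = next_token(sequence)  # the model sees everything generated so far
        sequence.append(token)        # the output becomes part of the next input
        if token == "<eos>":          # stop at the end-of-sequence marker
            break
    return sequence


# Hypothetical stand-in for a language model: predicts from the last token only.
TOY_BIGRAMS = {"GPT-3": "is", "is": "a", "a": "language",
               "language": "model", "model": "<eos>"}


def toy_next_token(sequence: List[str]) -> str:
    return TOY_BIGRAMS.get(sequence[-1], "<eos>")


print(" ".join(generate(toy_next_token, ["GPT-3"], max_new_tokens=10)))
# → GPT-3 is a language model <eos>
```

A real model would return a probability distribution over its vocabulary at each step and pick (or sample) a token from it; the feed-back loop stays the same.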

What makes GPT-3 notable is its size, 175 billion parameters, and the fact that it is accessible only through a paid API.

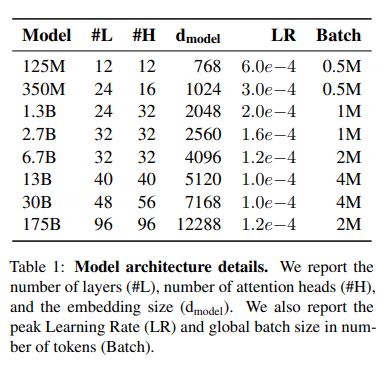

Getting into our topic: the OPT-175B model has 175 billion parameters, uses an architecture very similar to GPT-3's, and was trained on publicly available datasets. Because the model is open-sourced, Meta AI invites many more researchers to study large language models. Models like this can perform many NLP tasks, such as writing articles, solving math problems and answering questions.

Researchers can now test how these models behave at scale. The pretrained weights come in several smaller variants (125M, 350M, 1.3B, 2.7B, 6.7B, 13B and 30B parameters), and the full OPT-175B model is available to researchers on request.
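To see where a figure like "175 billion" comes from, a common back-of-the-envelope estimate for a GPT-style decoder-only transformer is about 12 × n_layers × d_model² weights in the transformer blocks (4·d_model² for the attention projections plus 8·d_model² for a feed-forward layer four times wider), plus the token-embedding table. The sketch below applies that rule. The layer counts, widths and the 50,272-entry vocabulary are assumed values based on our reading of the published OPT configurations, and biases, LayerNorms and position embeddings are ignored, so the results are approximations, not official counts.

```python
# Rough parameter count for a GPT-style decoder-only transformer.
# Assumptions: feed-forward hidden size = 4 * d_model; biases, LayerNorms
# and position embeddings are ignored; the output layer shares (ties) its
# weights with the token embedding, so the vocabulary is counted once.

VOCAB_SIZE = 50272  # assumed embedding-table size

# Assumed (n_layers, d_model) for a few OPT variants.
CONFIGS = {
    "125M": (12, 768),
    "1.3B": (24, 2048),
    "13B": (40, 5120),
    "175B": (96, 12288),
}


def estimate_params(n_layers: int, d_model: int, vocab: int = VOCAB_SIZE) -> int:
    attention = 4 * d_model * d_model     # Q, K, V and output projections
    feed_forward = 8 * d_model * d_model  # d_model -> 4*d_model -> d_model
    per_layer = attention + feed_forward  # = 12 * d_model^2
    embeddings = vocab * d_model          # token-embedding table
    return n_layers * per_layer + embeddings


for name, (layers, width) in CONFIGS.items():
    print(f"{name:>5}: ~{estimate_params(layers, width) / 1e9:.2f}B parameters")
```

Under these assumptions the largest configuration comes out at roughly 174.6B parameters, close to its nominal 175B; at that scale the transformer blocks, not the vocabulary, account for almost all of the weights.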

Comparison to GPT-3

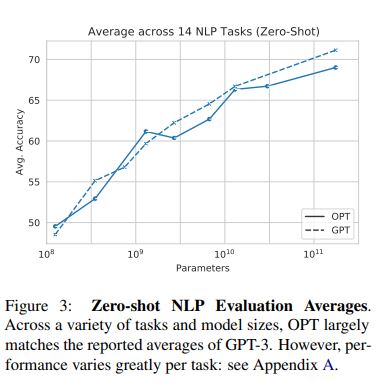

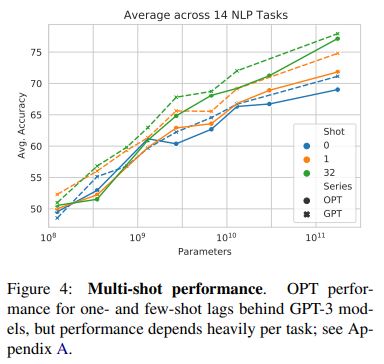

OPT's average performance follows the trend of GPT-3. However, performance can vary radically across tasks.

OPT's performance roughly matched GPT-3 on 10 tasks and fell short on 3 tasks (including ARC Challenge and MultiRC). On some tasks (CB, BoolQ, WSC), both the GPT-3 and OPT models behaved unpredictably as model size grew, likely because these tasks have small validation sets. On WiC, the OPT models outperformed the GPT-3 models.

The code for the OPT models is publicly available; Meta AI released it in its metaseq repository on GitHub.

Limitations:

OPT-175B tends to be repetitive and can easily get stuck in a loop.

OPT-175B also has a high propensity to generate toxic language and reinforce harmful stereotypes.

It can also produce factually incorrect statements, which can be harmful in applications where accuracy and precision matter, such as healthcare and scientific discovery.
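A simple way to spot the looping behavior mentioned among these limitations is to check generated text for a repeated n-gram. The sketch below is hypothetical (the function name and the default n = 3 are our own choices) and only detects repetition; it is not how OPT or Meta AI handle the problem. Decoding libraries provide related controls, for example the `no_repeat_ngram_size` option of Hugging Face Transformers' `generate()`, which prevents any n-gram of that size from appearing more than once.

```python
from typing import List, Optional, Tuple


def find_repeated_ngram(tokens: List[str], n: int = 3) -> Optional[Tuple[str, ...]]:
    """Return the first n-gram that occurs more than once in tokens, or None."""
    seen = set()
    for i in range(len(tokens) - n + 1):
        ngram = tuple(tokens[i:i + n])
        if ngram in seen:  # this n-gram already appeared earlier: a repetition
            return ngram
        seen.add(ngram)
    return None


looping = "the model said that the model said that the model said".split()
print(find_repeated_ngram(looping))                           # → ('the', 'model', 'said')
print(find_repeated_ngram("no loop in this sentence".split()))  # → None
```

A generation loop could call a check like this after each new token and stop (or resample) once a repeat appears.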

In summary, we hope that the public release of the OPT models will enable many more researchers to work on these important issues. Our team is here to help with any queries if you would like to know more.

Disclaimer: The opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Dexlock.