Let's Discuss

Enquire NowGoogle’s latest LLM, the Pathways Language Model or PaLM, achieves state-of-the-art few-shot performance across most tasks by significant margins after being evaluated on hundreds of language understanding and generation tasks.

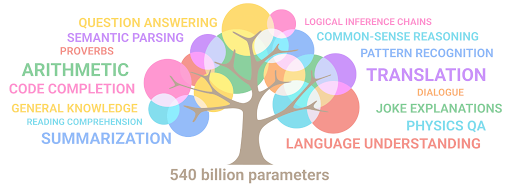

The Pathways Language Model (PaLM), a dense decoder-only Transformer model trained with the Pathways system, contains a whopping 540 billion parameters. PaLM allows to effectively train a single model across several TPU v4 Pods. PaLM achieves a training efficiency of 57.8% hardware FLOPs utilization, the highest yet achieved for LLMs at this scale, by combining the parallelism strategy, a reformulation of the Transformer block that allows the attention and feedforward layers to be computed in parallel, and speedups from TPU compiler optimizations.

Let’s look at PaLM in detail.

Image Credits: ai.googleblog.com

Trained on 540 billion parameters, PaLM can carry out a variety of NLP tasks like:

- Text to code conversion

- Solving word problems in math

- Describing a joke

- Common sense reasoning

- Translations

- And more…

A notch above other LLM’s like GPT3 (175 billion parameters) and Megatron-Turing NLG (530 Billion), PaLM is a dense model where all parameters have been used during inference. PaLM shows the largest TPU-based system configuration used for training to date, scaling training to 6144 chips, for the first time at a huge scale. Two Cloud TPU v4 Pods are used to scale the training, and standard data and model parallelism is used within each Pod to do so. In the past, LLMs were trained on a single TPU v3 Pod, expanded to 2240 A100 GPUs across GPU clusters using pipeline parallelism, or on multiple TPU v3 Pods with a maximum scale of 4096 TPU v3 chips.

The dataset used to train the model has a total size of 780 billion tokens and includes high-quality web content such as filtered web pages, multilingual social media chats, GitHub scripts, Wikipedia, news, and English-language books, with a lossless vocabulary that is noted for maintaining white spaces and separating off vocabulary Unicode characters into a bind. Only five per cent of the dataset used to train PaLM is related to coding.

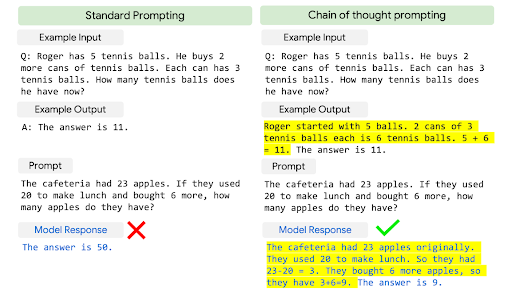

Based on this, it is evident that PaLM can write OS code using 196 GB of source code from various programming languages, including Java, JavaScript, HTML, Python, C, and C++. It can not only handle multiple languages but also convert code written in one language into another. PaLM’s impeccable performance is a result of high scalability combined with a new concept known as ‘Chain of Thought Prompting’. Using this technique, the model is tasked to give an answer along with the reason or solution with which it arrived at that answer. In addition, it has been proven to significantly improve accuracy.

Image Credits: ai.googleblog.com

There are three versions of PaLM, which benefit from scaling:

- PaLM of 8 billion parameters

- PaLM of 62 billion parameters

- PaLm of 540 billion parameters

Another technique used to train this method is termed ‘Few Shot Prompting’.

The Few Shot Approach is based on the human ability to utilize prior knowledge and understanding to solve a new problem when it arises. In PaLM, the model is prompted with a few solved examples which are pre-appended to the input, with which it solves any new problem.

PaLM provides a datasheet, model card, and Responsible AI benchmark results in addition to presenting thorough evaluations of the dataset and model outputs for biases and risks. While the analysis helps to highlight some of the model’s potential dangers, analysis that is task- and domain-specific is necessary to accurately calibrate, contextualize, and reduce potential risks. Research is still being done to better understand the risks and advantages of these models as well as to create scalable solutions that help prevent malicious language model usage.

Conclusion

PaLM effectively demonstrates the scaling capacity of the Pathways system to thousands of accelerator chips over two TPU v4 Pods by training a model with 540 billion parameters using a well-researched, well-established recipe of a dense decoder-only Transformer model. By pushing the limits of the model scale, PaLM offers ground-breaking few-shot performance across a variety of natural language processing, reasoning, and coding applications. PaLM makes way for ever more potent models by merging distinctive architectural options and training methods with scaling capabilities.

Much like Google, Dexlock strives to maintain significant expertise in the contemporary technologies on the market. We provide the best services to other futurist thinkers who want to make a difference with our knowledge in AI, ML, Big Data, Data Science, and other relevant topics. Considering a project similar to PaLM? To make your aspirations a reality, get in touch with us here.

Disclaimer: The opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Dexlock.