
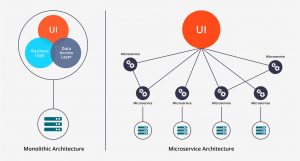

What is a microservice?

Even if you don’t know exactly what a microservice is, you have probably come across the term. More and more developers are embracing fine-grained microservice architectures.

In brief – ‘Microservices are small, autonomous services that work together’.

Let’s analyze the definition:

- Microservices are small, individual services, each focused on one specific piece of functionality.

- In a microservice architecture, components interact with each other over a lightweight protocol such as REST.

- Since components are independent, each service can be built with whatever language or technology suits it best.

- Each component is developed, deployed, and delivered independently, thus reducing the complexity of deployment.
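To make the bullets above concrete, here is a minimal sketch of two independent services that talk to each other only over REST, using nothing but the JDK (it assumes Java 11 or later for `java.net.http`). It is not Vert.x code; `PriceService`, `OrderService`, the `/price` path and the JSON payload are hypothetical names chosen for illustration.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

/** Hypothetical "price" microservice: one small service with one job, exposed over REST. */
class PriceService {
    private final HttpServer server;

    PriceService() {
        try {
            // Port 0 lets the OS pick a free port, so services can run side by side.
            server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        server.createContext("/price", exchange -> {
            byte[] body = "{\"sku\":\"book-1\",\"price\":12.5}".getBytes(StandardCharsets.UTF_8);
            exchange.getResponseHeaders().add("Content-Type", "application/json");
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
    }

    int port() { return server.getAddress().getPort(); }

    void stop() { server.stop(0); }
}

/** A second, independently deployable service that knows the first only by its HTTP address. */
class OrderService {
    private final HttpClient client = HttpClient.newHttpClient();
    private final URI priceUri;

    OrderService(int pricePort) {
        priceUri = URI.create("http://127.0.0.1:" + pricePort + "/price");
    }

    String fetchPrice() {
        HttpRequest request = HttpRequest.newBuilder(priceUri).GET().build();
        try {
            return client.send(request, HttpResponse.BodyHandlers.ofString()).body();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }
}
```

Each class could live in its own codebase and be deployed and scaled separately; the only contract between them is the HTTP endpoint.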

Vert.x

Eclipse Vert.x is a non-blocking, event-driven toolkit for building reactive applications on the JVM. It was designed with asynchronous communication in mind. Its event-driven, non-blocking design allows it to handle a high level of concurrency with a small number of kernel threads.

In the traditional synchronous (blocking) I/O threading model, network communication is handled by assigning one thread to each client, and that thread is not free until the client disconnects. Vert.x, by contrast, uses an event-driven programming style, which lets you write your code as if it were a single-threaded application.

The Vert.x core library defines the essential APIs for developing asynchronous networked applications. It is also polyglot, which means that an application can be written in multiple programming languages.

The core concepts of Vert.x are:

- Verticle

- Event loop

- Event bus

Verticle

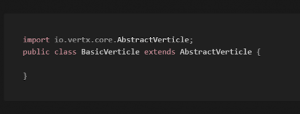

The term verticle refers to a component that can be deployed to Vert.x. In some ways a verticle is similar to a Servlet in Java: verticles let you encapsulate code for a specific purpose, and they run independently of one another.

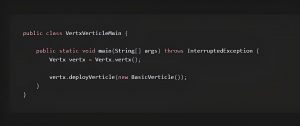

To implement a verticle, create a class that extends io.vertx.core.AbstractVerticle. Here’s an illustration of a Verticle class:

Vert.x supports three types of verticles:

- Standard Verticles

- Worker Verticles

- Multi-threaded Worker Verticles

Standard Verticles are the most common and useful type, and they are executed using an event loop thread.

Worker Verticles are executed by a thread from the worker pool. A worker verticle instance is never executed concurrently by more than one thread.

Multi-threaded Worker Verticles, like Worker Verticles, are executed by worker pool threads, but a single instance can be executed concurrently by multiple threads. This type was deprecated in Vert.x 3.6 and removed in Vert.x 4.
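The worker verticle guarantee (tasks run on pool threads, but never on two threads at once) can be modelled in plain Java by chaining each instance's tasks so that one starts only after the previous one has finished. This is a hypothetical sketch of the idea, not how Vert.x implements it; `SerializedWorker` and `WorkerDemo` are illustrative names.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executor;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical model of one worker verticle instance: pool threads, one task at a time. */
class SerializedWorker {
    private final Executor pool;
    private CompletableFuture<Void> tail = CompletableFuture.completedFuture(null);

    SerializedWorker(Executor pool) { this.pool = pool; }

    // Queue the task behind this instance's previous task. handle() swallows an earlier
    // failure so later tasks still run; the returned future reports this task's own outcome.
    synchronized CompletableFuture<Void> submit(Runnable task) {
        tail = tail.handle((ignored, error) -> null).thenRunAsync(task, pool);
        return tail;
    }
}

class WorkerDemo {
    private static int counter = 0; // deliberately not thread-safe
    private static final AtomicInteger active = new AtomicInteger();
    private static final AtomicInteger maxActive = new AtomicInteger();

    /** Returns {final counter value, highest number of tasks seen running at once}. */
    static int[] run(int tasks) {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        SerializedWorker worker = new SerializedWorker(pool);
        counter = 0;
        maxActive.set(0);
        CompletableFuture<Void> last = CompletableFuture.completedFuture(null);
        for (int i = 0; i < tasks; i++) {
            last = worker.submit(() -> {
                maxActive.accumulateAndGet(active.incrementAndGet(), Math::max);
                counter++;
                active.decrementAndGet();
            });
        }
        last.join(); // the last task completes only after every earlier one
        pool.shutdown();
        return new int[] { counter, maxActive.get() };
    }
}
```

Although four pool threads are available, at most one task of the instance runs at any moment, so the unsynchronized counter still ends at the expected value. Submitting the same tasks directly to the pool would remove that guarantee, which is the situation a multi-threaded worker exposed.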

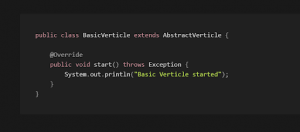

start()

AbstractVerticle has a start() method that you can override in your verticle class. Vert.x calls the start() method when the verticle is deployed and ready to start.

You initialize your verticle in the start() method. In most cases, the start() method will be used to create an HTTP or TCP server, register event handlers on the event bus, deploy other verticles, or do whatever else your verticle requires to function.

The AbstractVerticle class also has a version of start() that takes a Future as a parameter (a Promise in Vert.x 4). The verticle uses it to tell Vert.x, asynchronously, whether it started successfully.

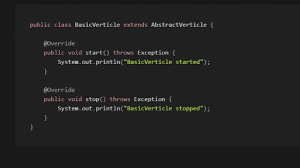
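The asynchronous start variant can be modelled with a `CompletableFuture` standing in for the Future (or, in Vert.x 4, Promise) parameter. In this hypothetical sketch, `AsyncVerticle`, `ConfigLoadingVerticle` and `AsyncDeployer` are illustrative names rather than Vert.x classes; the point is that deployment counts as finished only when the verticle completes or fails the promise, not when start() returns.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CompletionException;

/** Hypothetical stand-in for a verticle with an asynchronous start; not the Vert.x API. */
abstract class AsyncVerticle {
    // The verticle completes (or fails) the promise once its asynchronous setup is done.
    abstract void start(CompletableFuture<Void> startPromise);
}

class ConfigLoadingVerticle extends AsyncVerticle {
    private final boolean configAvailable;

    ConfigLoadingVerticle(boolean configAvailable) { this.configAvailable = configAvailable; }

    @Override
    void start(CompletableFuture<Void> startPromise) {
        // Simulate slow setup (e.g. reading configuration) on another thread.
        CompletableFuture.runAsync(() -> {
            if (configAvailable) {
                startPromise.complete(null);
            } else {
                startPromise.completeExceptionally(new IllegalStateException("config missing"));
            }
        });
        // start() returns immediately; deployment is not finished until the promise completes.
    }
}

class AsyncDeployer {
    static CompletableFuture<Void> deploy(AsyncVerticle verticle) {
        CompletableFuture<Void> startPromise = new CompletableFuture<>();
        verticle.start(startPromise);
        return startPromise;
    }

    // Returns "deployed" or "failed: <reason>". join() is fine in a demo, never on an event loop.
    static String outcome(boolean configAvailable) {
        try {
            deploy(new ConfigLoadingVerticle(configAvailable)).join();
            return "deployed";
        } catch (CompletionException e) {
            return "failed: " + e.getCause().getMessage();
        }
    }
}
```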

stop()

You can also override the stop() method of the AbstractVerticle class. Vert.x calls stop() when your verticle is undeployed, which also happens when Vert.x shuts down.

Here’s an example of how you can override the stop() method in your own verticle:

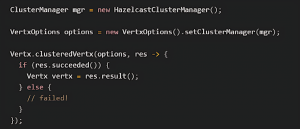
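The division of labour between the two methods (acquire resources in start(), release them in stop(), and let the runtime decide when each is called) can be sketched without Vert.x. `Lifecycle`, `MiniDeployer` and `ConnectionPoolVerticle` are hypothetical names, and the "connections" are plain strings.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical lifecycle contract loosely modelled on start()/stop(); not the Vert.x API. */
interface Lifecycle {
    void start();
    void stop();
}

/** Hypothetical runtime: it, not the component, decides when start() and stop() run. */
class MiniDeployer {
    private final Map<String, Lifecycle> deployed = new LinkedHashMap<>();
    private int nextId = 1;

    String deploy(Lifecycle component) {
        component.start();
        String id = "deployment-" + nextId++;
        deployed.put(id, component);
        return id;
    }

    void undeploy(String id) {
        Lifecycle component = deployed.remove(id);
        if (component == null) throw new IllegalArgumentException("Unknown deployment " + id);
        component.stop();
    }

    // Simulates shutdown: every component that is still deployed gets its stop() call.
    void close() {
        for (String id : new ArrayList<>(deployed.keySet())) undeploy(id);
    }
}

/** Example component that holds resources between start() and stop(). */
class ConnectionPoolVerticle implements Lifecycle {
    final List<String> openConnections = new ArrayList<>();

    @Override
    public void start() {
        openConnections.add("db-conn-1");
        openConnections.add("db-conn-2");
    }

    @Override
    public void stop() {
        openConnections.clear(); // release everything start() acquired
    }
}
```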

Deploying a Verticle

Once you have created a verticle, you need to deploy it inside Vert.x in order to execute it.

Here’s how you deploy it.

Here, a Vert.x instance is created first. Then the deployVerticle() method of the Vert.x instance is called. Once Vert.x deploys the BasicVerticle, it calls the verticle's start() method.

The verticle is deployed asynchronously, which means that it may not yet be deployed by the time the deployVerticle() method returns.

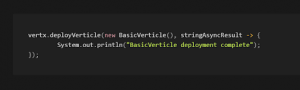

If we want to know exactly when a verticle has been deployed, we can pass a completion handler when deploying it, for example as a Java lambda expression.
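The asynchronous hand-off and the completion handler can be modelled with a hypothetical single-threaded task queue standing in for the event loop. `AsyncDeploymentDemo`, `deployVerticle` and `runPendingEvents` here are illustrative names, not Vert.x signatures; the sketch only shows why a deploy call can return before start() has run, and how a lambda handler later learns the outcome.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.function.BiConsumer;

/** Hypothetical model of asynchronous deployment; a queue stands in for the event loop. */
class AsyncDeploymentDemo {
    private final Deque<Runnable> eventQueue = new ArrayDeque<>();
    private int nextId = 1;

    // Queues the deployment and returns at once: start() has not run yet.
    // The handler later receives (deploymentId, null) on success or (null, cause) on failure.
    void deployVerticle(Runnable start, BiConsumer<String, Throwable> completionHandler) {
        eventQueue.add(() -> {
            String id = "deployment-" + nextId++;
            Throwable failure = null;
            try {
                start.run();
            } catch (RuntimeException e) {
                failure = e;
            }
            completionHandler.accept(failure == null ? id : null, failure);
        });
    }

    // Drains queued events one at a time, as a single event loop thread would.
    void runPendingEvents() {
        Runnable task;
        while ((task = eventQueue.poll()) != null) {
            task.run();
        }
    }

    static List<String> demo() {
        AsyncDeploymentDemo runtime = new AsyncDeploymentDemo();
        List<String> log = new ArrayList<>();
        BiConsumer<String, Throwable> handler = (id, err) ->
            log.add(err == null ? "deployed " + id : "failed: " + err.getMessage());

        runtime.deployVerticle(() -> log.add("start() called"), handler);
        runtime.deployVerticle(() -> { throw new IllegalStateException("port in use"); }, handler);
        log.add("deployVerticle() calls returned");

        runtime.runPendingEvents();
        return log;
    }
}
```

Calling `AsyncDeploymentDemo.demo()` logs `deployVerticle() calls returned` first, then `start() called`, `deployed deployment-1` and `failed: port in use`: both deploy calls return before either start routine runs.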

Event Loop

Vert.x implements the Reactor design pattern using event loops.

The main purpose of an event loop is to continuously check for new events and dispatch them to the handlers that know how to process them. A single event loop thread consuming all events would not make the best use of multi-core hardware, so Vert.x runs several event loops per instance (by default, twice the number of CPU cores), a variant the Vert.x documentation calls the Multi-Reactor pattern. An event loop must never be blocked: while it is blocked, it cannot handle any other event, and if you block all of the event loops in your Vert.x instance, your application will come to a halt.

Because a small, fixed number of event loop threads can serve many connections, Vert.x consumes far fewer system resources than a design that dedicates a thread to each client.

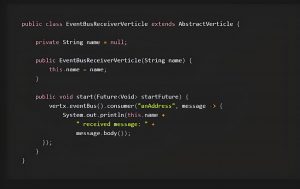

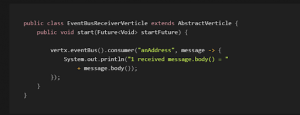
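To make the single-thread rule concrete, here is a toy, hypothetical event loop in plain Java: one thread takes events from a queue and dispatches each to the handler registered for its type. Real Vert.x event loops are built on Netty and non-blocking I/O, which this sketch does not attempt to model; `MiniEventLoop` and its methods are illustrative names.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

/** Hypothetical single-threaded event loop (a toy Reactor); not Vert.x's implementation. */
class MiniEventLoop {
    private final BlockingQueue<Runnable> queue = new LinkedBlockingQueue<>();
    private final Map<String, Consumer<String>> handlers = new ConcurrentHashMap<>();
    private final Thread thread;
    private volatile boolean running = true;

    MiniEventLoop(String name) {
        thread = new Thread(this::loop, name);
        thread.setDaemon(true);
        thread.start();
    }

    void on(String type, Consumer<String> handler) { handlers.put(type, handler); }

    // Safe to call from any thread; the handler itself always runs on the loop thread.
    void emit(String type, String payload) {
        queue.add(() -> {
            Consumer<String> handler = handlers.get(type);
            if (handler != null) handler.accept(payload);
        });
    }

    private void loop() {
        // Keep going until stopped, then drain whatever is still queued.
        while (running || !queue.isEmpty()) {
            Runnable event;
            try {
                event = queue.poll(10, TimeUnit.MILLISECONDS);
            } catch (InterruptedException e) {
                return;
            }
            if (event == null) continue;
            try {
                event.run(); // a slow or blocking handler here delays every other event
            } catch (RuntimeException e) {
                System.err.println("handler failed: " + e); // one bad handler must not kill the loop
            }
        }
    }

    void shutdownAndWait() {
        running = false;
        try {
            thread.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}

class EventLoopDemo {
    static List<String> run() {
        MiniEventLoop loop = new MiniEventLoop("event-loop-0");
        List<String> log = Collections.synchronizedList(new ArrayList<>());
        loop.on("request", p -> log.add(Thread.currentThread().getName() + " request " + p));
        loop.on("timer", p -> log.add(Thread.currentThread().getName() + " timer " + p));
        loop.emit("request", "GET /a");
        loop.emit("timer", "tick");
        loop.emit("request", "GET /b");
        loop.shutdownAndWait();
        return log;
    }
}
```

All three events are handled in order on `event-loop-0`. If the `timer` handler called `Thread.sleep(5000)`, the `GET /b` request would wait five seconds before being handled, which is why code running on an event loop must never block.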

EventBusReceiverVerticle class:

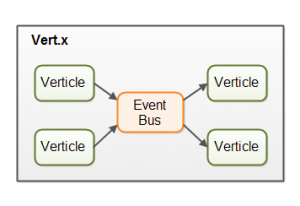

Event Bus

The Vert.x event bus is a lightweight distributed messaging system that allows different parts of your application, or different applications and services, to communicate with each other in a loosely coupled way.

The event bus supports the following modes of communication:

- point-to-point messaging

- request-response messaging

- publish/subscribe for broadcasting messages

The diagram below illustrates how the event bus works.

Listening for Messages

When a verticle wants to listen for messages from the event bus, it listens to a certain address.

An address is just a name that you can choose freely.

Multiple verticles can listen for messages on the same address, so an address is not unique to a single verticle. An address is more like the name of a channel: verticles can both listen for messages on it and send messages to it.

A verticle can obtain a reference to the event bus through the vertx field it inherits from AbstractVerticle, by calling vertx.eventBus().

Listening for messages on a given address can be accomplished with this EventBusReceiverVerticle.

This example shows a verticle that registers a message consumer on the Vert.x event bus. The consumer is registered on an address, which means it consumes the messages sent to that address via the event bus.
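The addressing model described above (an address is just a string, any number of consumers can share it, and a registration can be removed later) fits in a few lines of plain Java. This single-threaded `MiniBus` is a hypothetical illustration, not the Vert.x `EventBus` API; in Vert.x, consumers are invoked asynchronously on their verticle's context. It assumes Java 9 or later for `Map.of()`.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

/** Hypothetical, single-threaded model of event-bus consumers; not the Vert.x EventBus API. */
class MiniBus {
    private final Map<String, Map<Long, Consumer<String>>> consumers = new HashMap<>();
    private long nextId = 1;

    // Registers a handler on an address and returns an id for unregistering it later.
    long consumer(String address, Consumer<String> handler) {
        long id = nextId++;
        consumers.computeIfAbsent(address, a -> new LinkedHashMap<>()).put(id, handler);
        return id;
    }

    void unregister(String address, long id) {
        Map<Long, Consumer<String>> handlers = consumers.get(address);
        if (handlers != null) handlers.remove(id);
    }

    // Delivers the body to every handler on the address; returns how many received it.
    int publish(String address, String body) {
        List<Consumer<String>> targets =
            new ArrayList<>(consumers.getOrDefault(address, Map.of()).values());
        targets.forEach(handler -> handler.accept(body)); // copy allows unregistering mid-delivery
        return targets.size();
    }
}

class MiniBusDemo {
    static List<String> run() {
        MiniBus bus = new MiniBus();
        List<String> received = new ArrayList<>();
        long first = bus.consumer("news.feed", body -> received.add("A got " + body));
        bus.consumer("news.feed", body -> received.add("B got " + body));

        bus.publish("news.feed", "hello");     // both A and B receive it
        bus.unregister("news.feed", first);
        bus.publish("news.feed", "again");     // only B is still listening
        bus.publish("nobody.listens", "lost"); // no consumers, nothing delivered
        return received;
    }
}
```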

Sending messages

Messages can be sent over the event bus using either the send() or the publish() method. The publish() method delivers the message to all the handlers listening on the address.

The send() method delivers the message to only one of the handlers listening on the address.

The receiver of the message is chosen by Vert.x. At the time of writing, the Vert.x docs say that a handler is chosen using a “non-strict round-robin” algorithm. This means that Vert.x tries to distribute messages evenly among the listening handlers, which is useful for spreading a workload over multiple verticles (and therefore over multiple threads or CPU cores).

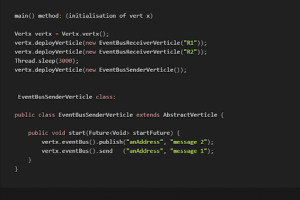

The example below shows two event bus consumers (listeners) and one event bus sender. The sender sends two messages to the specified address. The first message is sent with the publish() method, so both consumers receive it. The second message is sent with the send() method, so only one of the consumers receives it.
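The same two-consumer scenario can be modelled without Vert.x to show the difference in delivery. In this hypothetical `AddressDispatcher`, publish() calls every handler and send() picks one handler in strict rotation; Vert.x itself only promises a “non-strict” round-robin, so its exact choice may differ.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical model of send() vs publish() on one address; not the Vert.x implementation. */
class AddressDispatcher {
    private final List<Consumer<String>> handlers = new ArrayList<>();
    private int next = 0; // index of the handler that receives the next send()

    void register(Consumer<String> handler) { handlers.add(handler); }

    // publish(): every registered handler receives the message.
    void publish(String body) {
        handlers.forEach(handler -> handler.accept(body));
    }

    // send(): exactly one handler receives the message, chosen round-robin.
    // With no handlers this sketch simply drops the message.
    void send(String body) {
        if (handlers.isEmpty()) return;
        handlers.get(next % handlers.size()).accept(body);
        next = (next + 1) % handlers.size();
    }
}

class SendVsPublishDemo {
    static List<String> run() {
        AddressDispatcher address = new AddressDispatcher();
        List<String> log = new ArrayList<>();
        address.register(body -> log.add("consumer1: " + body));
        address.register(body -> log.add("consumer2: " + body));

        address.publish("first message"); // both consumers receive it
        address.send("second message");   // only one consumer receives it
        address.send("third message");    // the other consumer gets the next one
        return log;
    }
}
```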

Worker Verticle

Suppose we deploy our whole service as a single server verticle: an event loop verticle that does all of its blocking processing using executeBlocking. Behind the scenes, this method runs the code you give it on a worker thread taken from a worker pool. We can give a verticle its own pool when we deploy it, using the setWorkerPoolName and setWorkerPoolSize options in DeploymentOptions.

This lets us process our requests asynchronously. It does, however, couple our business logic to the server, limiting our scaling and deployment options.

This is what worker verticles are for: they are designed to execute blocking code. Unlike regular verticles, which run on an event loop thread, workers run on threads from the preconfigured worker pool. Since they are not part of the event loop, they can block while doing their processing. They communicate with other verticles over the Vert.x event bus.

Now the cool part is that we can deploy the server and the workers completely independently of each other. In fact, we can deploy more worker instances, written in a completely different language and running on a different machine. The worker encapsulates all the business logic and completely separates it from our server.
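The executeBlocking idea (run blocking code on a worker thread, then handle the result back on the event loop) can be imitated with two plain-Java executors. This is a hypothetical sketch: the thread names, the simulated 50 ms "database write" and `BlockingOffloadDemo` are illustrative, and in Vert.x the framework manages these threads for you.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical model of executeBlocking-style offloading with plain JDK executors. */
class BlockingOffloadDemo {
    private static ThreadFactory named(String prefix) {
        AtomicInteger n = new AtomicInteger();
        return r -> {
            Thread t = new Thread(r, prefix + n.getAndIncrement());
            t.setDaemon(true);
            return t;
        };
    }

    private static void simulateBlockingIo(long millis) {
        try {
            Thread.sleep(millis); // stands in for JDBC calls, file I/O, etc.
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    /** Returns {result, request thread, blocking thread, result thread}. */
    static String[] run() {
        ExecutorService eventLoop = Executors.newSingleThreadExecutor(named("event-loop-"));
        ExecutorService workers = Executors.newFixedThreadPool(2, named("worker-"));
        String[] threads = new String[3];

        String result = CompletableFuture
            .supplyAsync(() -> {
                threads[0] = Thread.currentThread().getName(); // request arrives on the event loop
                return "order-42";
            }, eventLoop)
            .thenApplyAsync(orderId -> {
                threads[1] = Thread.currentThread().getName(); // blocking work moves to a worker
                simulateBlockingIo(50);
                return orderId + " saved";
            }, workers)
            .thenApplyAsync(saved -> {
                threads[2] = Thread.currentThread().getName(); // result handled back on the event loop
                return saved;
            }, eventLoop)
            .join();

        eventLoop.shutdown();
        workers.shutdown();
        return new String[] { result, threads[0], threads[1], threads[2] };
    }
}
```

The event loop thread is never the one that sleeps; it only starts the work and later receives the result, which is the property executeBlocking preserves.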

Polyglot

Vert.x supports polyglot verticles, which means that verticles written in different languages can be deployed together and still communicate with each other.

For example, we can write a Java-based verticle that sends messages (with publish() and send()) and a Python- or Scala-based verticle that listens for those messages.

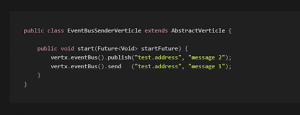

Suppose we have a message sender verticle class.

This Java verticle class sends a message via the publish() and send() methods.

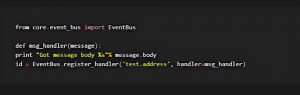

We can register a Python verticle to receive this event.

It’s as simple as that. The handler will receive any message sent to that address. The object passed into the handler is an instance of Core.Message, and the body of the message is available via its body attribute.

The return value of register_handler is a unique handler ID that can be used later to unregister the handler.

Clustering across multiple Nodes

A cluster manager is used in Vert.X for a variety of purposes, including:

- Vert.X node discovery and group membership in a cluster

- Maintaining cluster-wide lists of topic subscribers (so we know which nodes are interested in which event bus address)

- Distributed maps

- Distributed locks

- Distributed counters
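The subscriber bookkeeping in the second item can be pictured as a table from event bus address to the set of node IDs interested in it, updated as nodes join and leave. The hypothetical `SubscriptionTable` below keeps that table in a single JVM only to show its shape; in a real cluster the cluster manager stores and synchronizes this data across nodes.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/** Hypothetical, single-JVM picture of cluster-wide event bus subscriptions. */
class SubscriptionTable {
    private final Map<String, Set<String>> nodesByAddress = new HashMap<>();

    // A node registers a consumer on an address.
    void subscribe(String nodeId, String address) {
        nodesByAddress.computeIfAbsent(address, a -> new TreeSet<>()).add(nodeId);
    }

    // Group-membership change: a departed node must no longer receive messages.
    void nodeLeft(String nodeId) {
        nodesByAddress.values().forEach(nodes -> nodes.remove(nodeId));
        nodesByAddress.values().removeIf(Set::isEmpty);
    }

    // Which nodes should a message sent to this address be routed to?
    Set<String> nodesFor(String address) {
        return Collections.unmodifiableSet(
            nodesByAddress.getOrDefault(address, Collections.emptySet()));
    }
}
```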

Hazelcast is the default cluster manager in Vert.x.

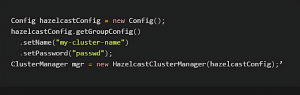

If we are embedding Vert.x, we can specify the cluster manager programmatically by setting it on the options when we create our Vert.x instance. If we want to provide a configuration, we can also do that programmatically when initialising the cluster manager: besides its default no-argument constructor, HazelcastClusterManager has a constructor that takes a Hazelcast Config object.


Disclaimer: The opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Dexlock.